Preparing a timeline for the development of software is a difficult problem – teams have proven time and time again that they’re tremendously bad at estimating how long it takes to develop software at any kind of scale.

Unfortunately, there’s a conflict between the need for financial planning and budgeting and the unpredictable nature of software delivery, to the extent that agile software advocates frequently dismiss both the accuracy of, and the need for, timelines and schedules.

This presents a difficult problem – organisations need to plan and budget, but their best technical people tell them that accurate planning is impossible.

We can make projections to try and close this gap.

Projections are common in financial planning and they serve the same purpose in software – they’re a forecast.

From http://www.entrepreneur.com/encyclopedia/financial-projections:

> Planning out and working on your company's financial projections each year could be one of the most important things you do for your business. The results--the formal projections--are often less important than the process itself. If nothing else, strategic planning allows you to "come up for air" from the daily problems of running the company, take stock of where your company is, and establish a clear course to follow.

Importantly:

> Variances from projections provide early warning of problems. And when variances occur, the plan can provide a framework for determining the financial impact and the effects of various corrective actions.

Projections are formal educated guesses – they don’t claim accuracy, but they intend to give a reasonable idea of progress.

With a well-thought-out projection, your team can continuously evaluate how their current progress lines up with it. As and when the team’s real-world progress and the projection start to diverge, they can realistically measure the difference between the two and adjust the projection accordingly.

Used in this way, projections are a useful tool for communicating with business stakeholders – suggesting a “best guess at when we might be doing this work” and a means of communicating changes in timelines divorced and abstracted away from the “coal face” of user stories, velocity, estimation and planning. Teams use their projections and actual progress to explain and adjust for changes in complexity, scope and delivery time.

If estimation and planning in software are hard, then projection is much harder: it’s “The Lord of the Rings”, a sprawling epic of fiction.

On a small scale, planning is predicated on the understanding that “similar things, with a similar team, take a similar amount of time” – and this generally works quite well.

People understand this quite intuitively: it’s the reason your plumber can tell you roughly how long it’ll take and how much it’ll cost to fit a bathroom. At a larger scale, however, planning starts to fall apart. The more complex a job, the more nuance is involved and the more room there is to lose details and accuracy.

The challenge in putting together a projection is to bridge the gap between the small scale accuracy of planning features, and the long term strategic objectives of a product.

The Simplest Possible Process

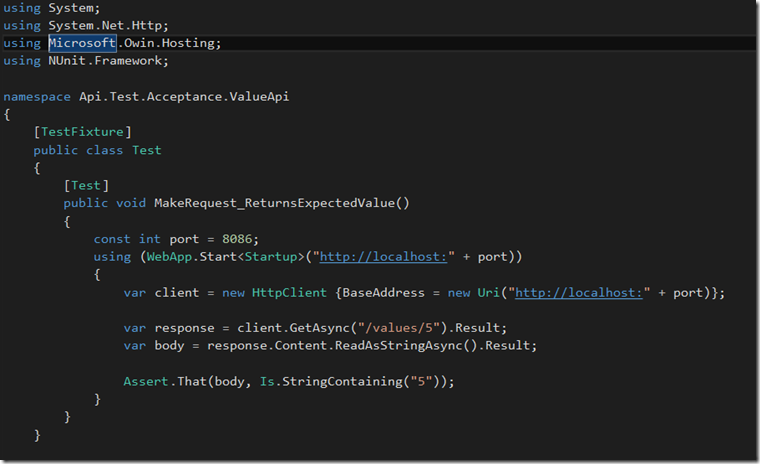

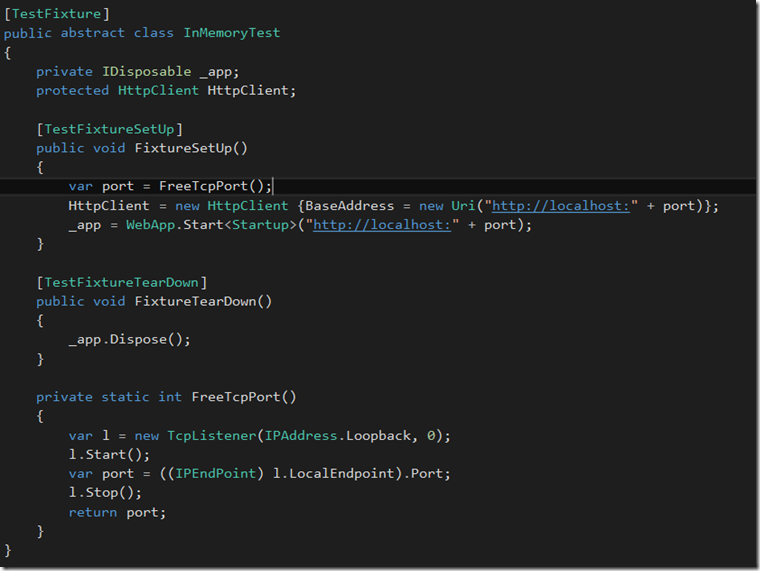

In order to put together a projection and make sure that it’s realistic, you need a baseline: some proven and known information to use as the basis for the projection.

You’ll need a few things:

- A backlog of “epics” – or large chunks of work

- Some user stories of work you’ve already completed

- A record of the actual time taken to finish that work

- A big wall

- Some sticky notes

With these things we’re going to:

- Establish a baseline from the work we’ve already completed

- Place our completed work on the wall using sticky notes

- Estimate our new work in relation to our completed work

- Assign our new work a rough time-box

A note on scales of estimation

Agile teams generally use abstract estimation scales to plan work, frequently the modified Fibonacci sequence or T-shirt sizes. It’s best to use a different scale for your projections than the one you normally use for story estimation, so that people don’t accidentally equate the estimates of stories in a sprint with the estimates of epics in a roadmap.

I find it most useful to use Fibonacci for scoring user stories and T-shirt sizes for projections.

The trick to a successful projection is to find a completed epic or big chunk of work to “hang your hat on”. You can then make a reasonable estimate of new work by asking the question:

“Is this new piece of work larger or smaller than the thing we already did?”

This relative estimation technique is called stack ranking – it’s simplistic, but very easy for an entire team to understand.

Take this first item, write it on a sticky note and position it somewhere in the middle of the wall, making a note of the time it took to complete the work as you stick it up.

You should repeat this exercise with a handful of pieces of work that you’ve already completed – giving you a baseline of the kinds of tasks your team performs, and their relative complexity to each other based on their ordering.

As you stick the notes on the wall, if something is significantly more difficult than something else, leave a bit of vertical space between the two notes as you place them on the wall.

Now that you’ve established a baseline around work you’ve already completed, you can start talking, in broad terms, about the large stories or epics you want to project and schedule.

Work through your backlog of epics, splitting them down if you can into smaller chunks, and arranging them around the stack ranked work on your wall. If something is about as hard as something else, place it at the same level as the thing it’s as hard as.
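Placing a new epic among already ranked work can be thought of as a series of “larger or smaller?” questions. The sketch below is only an illustration of that idea: the function `place_on_wall`, the item names and the `gut_feel` numbers are all hypothetical, and `is_larger` stands in for the conversation the team has at the wall.

```python
from typing import Callable, List


def place_on_wall(
    ranked: List[str],
    new_item: str,
    is_larger: Callable[[str, str], bool],
) -> List[str]:
    """Insert new_item into a smallest-to-largest ranking.

    is_larger(a, b) stands in for the team's answer to
    "is a larger than b?". A binary search keeps the number
    of questions small.
    """
    lo, hi = 0, len(ranked)
    while lo < hi:
        mid = (lo + hi) // 2
        if is_larger(new_item, ranked[mid]):
            lo = mid + 1  # new item belongs above the middle note
        else:
            hi = mid      # new item belongs at or below the middle note
    return ranked[:lo] + [new_item] + ranked[lo:]


# Hypothetical gut-feel sizes, used here only to simulate the team's answers.
gut_feel = {"Login page": 2, "Search": 5, "Reporting": 6, "Billing": 8}

wall = ["Login page", "Search", "Billing"]  # completed work, smallest first
wall = place_on_wall(wall, "Reporting", lambda a, b: gut_feel[a] > gut_feel[b])
print(wall)
```

In practice nobody has the numbers in `gut_feel`; the point is that each placement only needs a handful of relative comparisons against work the team already understands.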

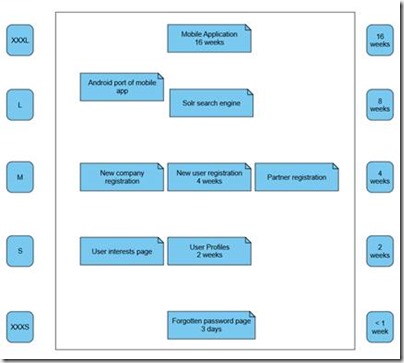

Once you’ve placed all of your backlog on the wall, arrange a time scale down the right-hand side of the wall, based on the work you’ve already completed, and a scale from “XXXS” to “XXXL” down the left-hand side.

The whole team can then adjust their estimates based on the time windows and the relative sizes assembled on the wall. Once the team is happy with their relative estimates, they should assign a T-shirt size to each sticky note of work, which, in turn, relates to a rough estimate of time.
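One way to turn sizes into rough time-boxes is to average the actual time taken by completed work of each size. This is a minimal sketch under that assumption; the epic names, sizes and week counts are made up for illustration, and averaging is just one reasonable choice.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical completed work: (name, T-shirt size, actual weeks taken).
completed = [
    ("Checkout rewrite", "M", 12),
    ("Search filters", "S", 5),
    ("Audit log", "S", 7),
    ("Payments integration", "L", 20),
]


def time_boxes(completed_work):
    """Average the actual weeks for each T-shirt size in the baseline."""
    by_size = defaultdict(list)
    for _name, size, weeks in completed_work:
        by_size[size].append(weeks)
    return {size: mean(weeks) for size, weeks in by_size.items()}


boxes = time_boxes(completed)

# Hypothetical backlog epics, sized by the team at the wall.
backlog = {"Recommendations": "L", "Profile page": "S"}
projected = {epic: boxes[size] for epic, size in backlog.items()}
print(projected)
```

A size with no completed work behind it has no time-box here, which is a useful prompt: the team has nothing to “hang its hat on” for that size yet.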

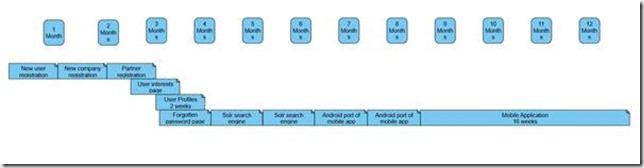

You can now remove the sticky notes from the wall, and arrange them horizontally into a timeline using the “medium size” sticky note as a guide.
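Laying the notes out end to end amounts to a running total of their time-boxes. The sketch below assumes the work is done one epic at a time and uses a hypothetical `SIZE_WEEKS` table in which a medium is about three months; neither assumption comes from a real project.

```python
from itertools import accumulate

# Assumed time-boxes in weeks; "M" is roughly three months.
SIZE_WEEKS = {"S": 6, "M": 12, "L": 24}


def timeline(epics):
    """Turn an ordered list of (name, size) into (name, start_week, end_week)."""
    durations = [SIZE_WEEKS[size] for _name, size in epics]
    ends = list(accumulate(durations))  # running total of weeks
    starts = [0] + ends[:-1]            # each epic starts where the last ended
    return [(name, s, e) for (name, _size), s, e in zip(epics, starts, ends)]


plan = timeline([
    ("Profile page", "S"),
    ("Recommendations", "L"),
    ("Reporting", "M"),
])
for name, start, end in plan:
    print(f"{name}: weeks {start} to {end}")
```

The output is a set of time windows rather than dates, which matches how the projection is meant to be read.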

These timelines are based on a known set of completed work but are, nonetheless, just projections. They’re not delivery dates and they’re not release dates; they’re best-guess estimates of the time window in which work will be completed.

Adjusting your projections

Projections are only successful when continually re-adjusted: they’re the early warning, the canary in the coal mine, a way to know when you’re diverging from your expectations. Treating a projection like a schedule will cause nothing but unmet expectations and disappointment.

As you complete actual pieces of work from your projection, you should compare each piece’s original projection against its actual time to completion, making a note of the percentage by which it was over- or underestimated.
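The percentage is simple arithmetic. As a small sketch, with a hypothetical helper name and made-up week counts:

```python
def variance_pct(projected_weeks: float, actual_weeks: float) -> float:
    """Percentage difference between actual and projected time.

    Positive: the work took longer than projected (it was underestimated).
    Negative: the work finished sooner than projected (it was overestimated).
    """
    return (actual_weeks - projected_weeks) / projected_weeks * 100


late = variance_pct(projected_weeks=12, actual_weeks=15)
early = variance_pct(projected_weeks=12, actual_weeks=9)
print(f"{late:+.0f}%", f"{early:+.0f}%")
```

Tracking the sign as well as the size of the variance shows whether the team tends to run long or short.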

Once you complete a piece, you should adjust your projection based on actual results – checking that your assertion that the “medium sized thing takes three months” holds true.
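One simple way to adjust is to scale the remaining projections by the ratio of actual to projected time on the work finished so far. This is a sketch of that one approach, not the only way to re-plan; the function name and all figures are hypothetical.

```python
def rescale(remaining, projected_done, actual_done):
    """Scale remaining projections by how far off completed work has been.

    remaining:       {epic name: projected weeks} for work not yet done
    projected_done:  projected weeks for each completed piece
    actual_done:     actual weeks for the same completed pieces
    """
    factor = sum(actual_done) / sum(projected_done)
    return {epic: weeks * factor for epic, weeks in remaining.items()}


# Two completed pieces were projected at 12 and 6 weeks but took 15 and 12.
adjusted = rescale(
    remaining={"Reporting": 12, "Recommendations": 24},
    projected_done=[12, 6],
    actual_done=[15, 12],
)
print(adjusted)
```

Here completed work took one and a half times as long as projected, so the “medium sized thing” moves from twelve weeks to eighteen until new results say otherwise.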

Once armed with real information, you can have honest conversations about dates based in reality, not fiction, helping your product owners and stakeholders understand if they’re going to be delivering earlier or later than they expected, before it takes them by surprise.